pyrocko.trace¶

Basic signal processing for seismic waveforms.

Functions

|

Calculate the misfit denominator m and the normalization divisor n according to norm. |

|

Compute biased estimate of the first autocorrelation coefficients. |

|

Successively downsample broken continuous trace data (coroutine). |

|

Successively filter broken continuous trace data (coroutine). |

|

Cross correlation of two traces. |

|

Try to connect traces and remove gaps. |

|

Get approximate amount of cutoff which will be produced by downsampling. |

|

Equalize sampling rates of two traces (reduce higher sampling rate to lower). |

|

Try to undo sampling rate rounding errors. |

|

Merge data samples from multiple traces into a 2D array. |

|

Return the hilbert transform of x of length N. |

|

Homogenize sampling rate, time span, sampling instants, and data type. |

|

Merge network-station-location-channel codes of a pair of traces. |

|

Get data range given traces grouped by selected pattern. |

|

Get time range given traces grouped by selected pattern. |

|

Slow version of |

|

Call |

|

Get range of lags for which |

Check if NumPy's correlate function reveals old behaviour. |

|

|

Affine transform of three-component traces. |

|

Figure out what dependencies project() would produce. |

|

2D rotation of traces. |

|

Rotate traces from ZNE to LQT system. |

|

Rotate traces from NE to RT system. |

|

Check if two traces have the same sampling rate. |

|

Show traces in a snuffler window. |

|

Compute autoregression coefficients using Yule-Walker method. |

Classes

|

Cosine Fader. |

|

Cosine Taper. |

|

Any |

|

Frequency domain Gaussian filter. |

|

Contains misfit setup to be used in |

|

Multiplication of several tapers. |

|

Utility to store channel-specific state in coroutines. |

|

Base class for tapers. |

|

Create new trace object. |

Exceptions

This exception is raised by some |

|

Raised when traces have incompatible sampling rate, time span or data type. |

|

This exception is raised by |

|

This exception is raised by some |

|

This exception is raised by some |

|

This exception is raised by some |

|

This exception is raised by some |

- class Trace(network='', station='STA', location='', channel='', tmin=0.0, tmax=None, deltat=1.0, ydata=None, mtime=None, meta=None, extra='')[source]¶

Bases:

ObjectCreate new trace object.

A

Traceobject represents a single continuous strip of evenly sampled time series data. It is built from a 1D NumPy array containing the data samples and some attributes describing its beginning and ending time, its sampling rate and four string identifiers (its network, station, location and channel code).- Parameters:

network – network code

station – station code

location – location code

channel – channel code

extra – extra code

tmin – system time of first sample in [s]

tmax – system time of last sample in [s] (if set to

Noneit is computed from length ofydata)deltat – sampling interval in [s]

ydata – 1D numpy array with data samples (can be

Nonewhentmaxis notNone)mtime – optional modification time

meta – additional meta information (not used, but maintained by the library)

The length of the network, station, location and channel codes is not resricted by this software, but data formats like SAC, Mini-SEED or GSE have different limits on the lengths of these codes. The codes set here are silently truncated when the trace is stored

- ♦ network¶

str, default:''Deployment/network code (1-8)

- ♦ station¶

str, default:'STA'Station code (1-5)

- ♦ location¶

str, default:''Location code (0-2)

- ♦ channel¶

str, default:''Channel code (3)

- ♦ extra¶

str, default:''Extra/custom code

- ♦ tmin¶

pyrocko.util.get_time_float (

pyrocko.guts.Timestamp), default:str_to_time('1970-01-01 00:00:00')

- ♦ tmax¶

pyrocko.util.get_time_float (

pyrocko.guts.Timestamp)

- ♦ deltat¶

float, default:1.0

- ♦ ydata¶

numpy.ndarray (

pyrocko.guts_array.Array), optional

- ♦ mtime¶

pyrocko.util.get_time_float (

pyrocko.guts.Timestamp), optional

- interpolate(t, clip=False)[source]¶

Value of trace between supporting points through linear interpolation.

- Parameters:

t – time instant

clip – whether to clip indices to trace ends

- add(other, interpolate=True, left=0.0, right=0.0)[source]¶

Add values of other trace (self += other).

Add values of

othertrace to the values ofself, where it intersects withother. This method does not change the extent ofself. IfinterpolateisTrue(the default), the values ofotherto be added are interpolated at sampling instants ofself. Linear interpolation is performed. In this case the sampling rate ofothermust be equal to or lower than that ofself. IfinterpolateisFalse, the sampling rates of the two traces must match.

- mult(other, interpolate=True)[source]¶

Muliply with values of other trace

(self *= other).Multiply values of

othertrace to the values ofself, where it intersects withother. This method does not change the extent ofself. IfinterpolateisTrue(the default), the values ofotherto be multiplied are interpolated at sampling instants ofself. Linear interpolation is performed. In this case the sampling rate ofothermust be equal to or lower than that ofself. IfinterpolateisFalse, the sampling rates of the two traces must match.

- set_codes(network=None, station=None, location=None, channel=None, extra=None)[source]¶

Set network, station, location, and channel codes.

- is_relevant(tmin, tmax, selector=None)[source]¶

Check if trace has overlap with a given time span and matches a condition callback. (internal use)

- append(data)[source]¶

Append data to the end of the trace.

To make this method efficient when successively very few or even single samples are appended, a larger grow buffer is allocated upon first invocation. The traces data is then changed to be a view into the currently filled portion of the grow buffer array.

- chop(tmin, tmax, inplace=True, include_last=False, snap=(<built-in function round>, <built-in function round>), want_incomplete=True)[source]¶

Cut the trace to given time span.

If the

inplaceargument is True (the default) the trace is cut in place, otherwise a new trace with the cut part is returned. By default, the indices where to start and end the trace data array are determined by rounding oftminandtmaxto sampling instances using Python’sround()function. This behaviour can be changed with thesnapargument, which takes a tuple of two functions (one for the lower and one for the upper end) to be used instead ofround(). The last sample is by default not included unlessinclude_lastis set to True. If the given time span exceeds the available time span of the trace, the available part is returned, unlesswant_incompleteis set to False - in that case, aNoDataexception is raised. This exception is always raised, when the requested time span does dot overlap with the trace’s time span.

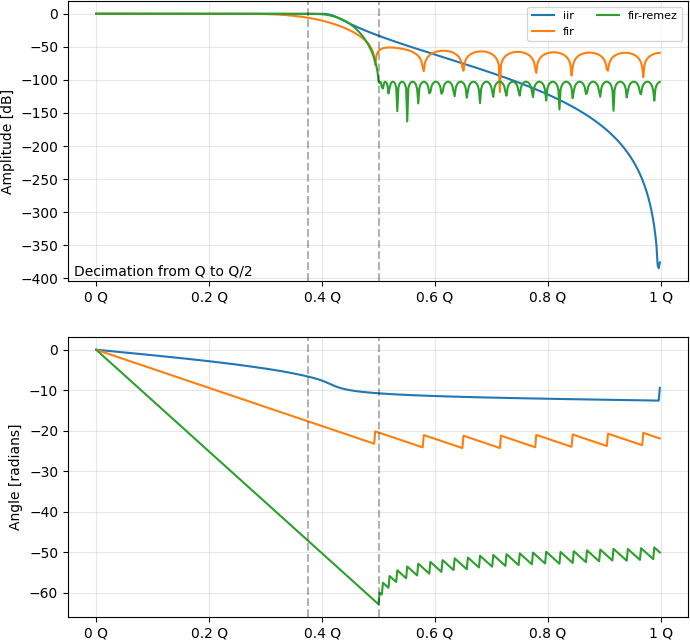

- downsample(ndecimate, snap=False, demean=False, ftype='fir-remez', cut=False)[source]¶

Downsample (decimate) trace by a given integer factor.

Antialiasing filter details:

iir: A Chebyshev type I digital filter of order 8 with maximum ripple of 0.05 dB in the passband.fir: A FIR filter using a Hamming window and 31 taps and a well-behaved phase response.fir-remez: A FIR filter based on the Remez exchange algorithm with45*ndecimatetaps and a cutoff at 75% Nyquist frequency.

Comparison of the digital filters:

See also

Trace.downsample_to().- Parameters:

ndecimate (int) – Decimation factor, avoid values larger than 8.

snap (bool) – Whether to put the new sampling instants closest to multiples of the sampling rate (according to absolute time).

demean (bool) – Whether to demean the signal before filtering.

ftype – Which FIR filter to use, choose from

'iir','fir','fir-remez'. Default is'fir-remez'.cut (bool) – Whether to cut off samples in the beginning of the trace which are polluted by artifacts of the anti-aliasing filter.

- downsample_to(deltat, snap=False, allow_upsample_max=1, demean=False, ftype='fir-remez', cut=False)[source]¶

Downsample to given sampling rate.

Tries to downsample the trace to a target sampling interval of

deltat. This runsdownsample()one or several times. Ifallow_upsample_maxis set to a value larger than 1, intermediate upsampling steps are allowed, in order to increase the number of possible downsampling ratios.If the requested ratio is not supported, an exception of type

pyrocko.util.UnavailableDecimationis raised.The downsampled trace will be shorter than the input trace because the anti-aliasing filter introduces edge effects. If cut is selected, additionally, polluted samples in the beginning of the trace are removed. The approximate amount of cutoff which will be produced by a given downsampling configuration can be estimated with

downsample_tpad().See also:

Trace.downsample().- Parameters:

deltat (float) – Desired sampling interval in [s].

allow_upsample_max (int) – Maximum allowed upsampling factor of the signal to achieve the desired sampling interval. Default is

1.snap (bool) – Whether to put the new sampling instants closest to multiples of the sampling rate (according to absolute time).

demean (bool) – Whether to demean the signal before filtering.

ftype – Which FIR filter to use, choose from

'iir','fir','fir-remez'. Default is'fir-remez'.cut – Whether to cut off samples in the beginning of the trace which are polluted by artifacts of the anti-aliasing filter.

- resample(deltat)[source]¶

Resample to given sampling rate

deltat.Resampling is performed in the frequency domain.

- stretch(tmin_new, tmax_new)[source]¶

Stretch signal while preserving sample rate using sinc interpolation.

- Parameters:

tmin_new – new time of first sample

tmax_new – new time of last sample

This method can be used to correct for a small linear time drift or to introduce sub-sample time shifts. The amount of stretching is limited to 10% by the implementation and is expected to be much smaller than that by the approximations used.

- nyquist_check(frequency, intro='Corner frequency', warn=True, raise_exception=False)[source]¶

Check if a given frequency is above the Nyquist frequency of the trace.

- Parameters:

intro – string used to introduce the warning/error message

warn – whether to emit a warning

raise_exception – whether to raise an

AboveNyquistexception.

- lowpass(order, corner, nyquist_warn=True, nyquist_exception=False, demean=True)[source]¶

Apply Butterworth lowpass to the trace.

- Parameters:

order – order of the filter

corner – corner frequency of the filter

Mean is removed before filtering.

- highpass(order, corner, nyquist_warn=True, nyquist_exception=False, demean=True)[source]¶

Apply butterworth highpass to the trace.

- Parameters:

order – order of the filter

corner – corner frequency of the filter

Mean is removed before filtering.

- bandpass(order, corner_hp, corner_lp, demean=True)[source]¶

Apply butterworth bandpass to the trace.

- Parameters:

order – order of the filter

corner_hp – lower corner frequency of the filter

corner_lp – upper corner frequency of the filter

Mean is removed before filtering.

- bandstop(order, corner_hp, corner_lp, demean=True)[source]¶

Apply bandstop (attenuates frequencies in band) to the trace.

- Parameters:

order – order of the filter

corner_hp – lower corner frequency of the filter

corner_lp – upper corner frequency of the filter

Mean is removed before filtering.

- envelope(inplace=True)[source]¶

Calculate the envelope of the trace.

- Parameters:

inplace – calculate envelope in place

The calculation follows:

where H is the Hilbert-Transform of the signal Y.

- taper(taperer, inplace=True, chop=False)[source]¶

Apply a

Taperto the trace.- Parameters:

taperer – instance of

Tapersubclassinplace – apply taper inplace

chop – if

True: exclude tapered parts from the resulting trace

- whiten(order=6)[source]¶

Whiten signal in time domain using autoregression and recursive filter.

- Parameters:

order – order of the autoregression process

- ampspec_whiten(width, td_taper='auto', fd_taper='auto', pad_to_pow2=True, demean=True)[source]¶

Whiten signal via frequency domain using moving average on amplitude spectra.

- Parameters:

width – width of smoothing kernel [Hz]

td_taper – time domain taper, object of type

TaperorNoneor'auto'.fd_taper – frequency domain taper, object of type

TaperorNoneor'auto'.pad_to_pow2 – whether to pad the signal with zeros up to a length of 2^n

demean – whether to demean the signal before tapering

The signal is first demeaned and then tapered using

td_taper. Then, the spectrum is calculated and inversely weighted with a smoothed version of its amplitude spectrum. A moving average is used for the smoothing. The smoothed spectrum is then tapered usingfd_taper. Finally, the smoothed and tapered spectrum is back-transformed into the time domain.If

td_taperis set to'auto',CosFader(1.0/width)is used. Iffd_taperis set to'auto',CosFader(width)is used.

- snap(inplace=True, interpolate=False)[source]¶

Shift trace samples to nearest even multiples of the sampling rate.

- Parameters:

inplace – (boolean) snap traces inplace

If

inplaceisFalseand the difference of tmin and tmax of both, the snapped and the original trace is smaller than 0.01 x deltat,snap()returns the unsnapped instance of the original trace.

- sta_lta_centered(tshort, tlong, quad=True, scalingmethod=1)[source]¶

Run special STA/LTA filter where the short time window is centered on the long time window.

- Parameters:

tshort – length of short time window in [s]

tlong – length of long time window in [s]

quad – whether to square the data prior to applying the STA/LTA filter

scalingmethod – integer key to select how output values are scaled / normalized (

1,2, or3)

Scalingmethod

Implementation

Range

1As/Al* Ts/Tl

[0,1]

2(As/Al - 1) / (Tl/Ts - 1)

[-Ts/Tl,1]

3Like

2but clipping range at zero[0,1]

- sta_lta_right(tshort, tlong, quad=True, scalingmethod=1)[source]¶

Run special STA/LTA filter where the short time window is overlapping with the last part of the long time window.

- Parameters:

tshort – length of short time window in [s]

tlong – length of long time window in [s]

quad – whether to square the data prior to applying the STA/LTA filter

scalingmethod – integer key to select how output values are scaled / normalized (

1,2, or3)

Scalingmethod

Implementation

Range

1As/Al* Ts/Tl

[0,1]

2(As/Al - 1) / (Tl/Ts - 1)

[-Ts/Tl,1]

3Like

2but clipping range at zero[0,1]

With

scalingmethod=1, the values produced by this variant of the STA/LTA are equivalent towhere

are the input samples,

are the number of samples in the short time window and

are the number of samples in the long time window.

- peaks(threshold, tsearch, deadtime=False, nblock_duration_detection=100)[source]¶

Detect peaks above a given threshold (method 1).

From every instant, where the signal rises above

threshold, a time length oftsearchseconds is searched for a maximum. A list with tuples (time, value) for each detected peak is returned. Thedeadtimeargument turns on a special deadtime duration detection algorithm useful in combination with recursive STA/LTA filters.

- peaks2(threshold, tsearch)[source]¶

Detect peaks above a given threshold (method 2).

This variant of peak detection is a bit more robust (and slower) than the one implemented in

Trace.peaks(). First all samples witha[i-1] < a[i] > a[i+1]are masked as potential peaks. From these, iteratively the one with the maximum amplitudea[j]and timet[j]is choosen and potential peaks withint[j] - tsearch, t[j] + tsearchare discarded. The algorithm stops, whena[j] < thresholdor when no more potential peaks are left.

- extend(tmin=None, tmax=None, fillmethod='zeros')[source]¶

Extend trace to given span.

- Parameters:

tmin – begin time of new span

tmax – end time of new span

fillmethod –

'zeros','repeat','mean', or'median'

- transfer(tfade=0.0, freqlimits=None, transfer_function=None, cut_off_fading=True, demean=True, invert=False)[source]¶

Return new trace with transfer function applied (convolution).

- Parameters:

tfade – rise/fall time in seconds of taper applied in timedomain at both ends of trace.

freqlimits – 4-tuple with corner frequencies in Hz.

transfer_function – FrequencyResponse object; must provide a method ‘evaluate(freqs)’, which returns the transfer function coefficients at the frequencies ‘freqs’.

cut_off_fading – whether to cut off rise/fall interval in output trace.

demean – remove mean before applying transfer function

invert – set to True to do a deconvolution

- differentiate(n=1, order=4, inplace=True)[source]¶

Approximate first or second derivative of the trace.

- Parameters:

n – 1 for first derivative, 2 for second

order – order of the approximation 2 and 4 are supported

inplace – if

Truethe trace is differentiated in place, otherwise a new trace object with the derivative is returned.

Raises

ValueErrorfor unsupported n or order.See

diff_fd()for implementation details.

- misfit(candidate, setup, nocache=False, debug=False)[source]¶

Calculate misfit and normalization factor against candidate trace.

- Parameters:

candidate –

Traceobjectsetup –

MisfitSetupobject

- Returns:

tuple

(m, n), where m is the misfit value and n is the normalization divisor

If the sampling rates of

selfandcandidatediffer, the trace with the higher sampling rate will be downsampled.

- spectrum(pad_to_pow2=False, tfade=None, ntrans_min=None)[source]¶

Get FFT spectrum of trace.

- Parameters:

pad_to_pow2 – whether to zero-pad the data to next larger power-of-two length

tfade –

Noneor a time length in seconds, to apply cosine shaped tapers to both

- Returns:

a tuple with (frequencies, values)

- fill_template(template, **additional)[source]¶

Fill string template with trace metadata.

Uses normal python ‘%(placeholder)s’ string templates. The following placeholders are considered:

network,station,location,channel,tmin(time of first sample),tmax(time of last sample),tmin_ms,tmax_ms,tmin_us,tmax_us,tmin_year,tmax_year,tmin_month,tmax_month,tmin_day,tmax_day,julianday. The variants ending with'_ms'include milliseconds, those with'_us'include microseconds, those with'_year'contain only the year.

- plot()[source]¶

Show trace with matplotlib.

See also:

Trace.snuffle().

- snuffle(**kwargs)[source]¶

Show trace in a snuffler window.

- Parameters:

stations – list of

pyrocko.model.station.Stationobjects orNoneevents – list of

pyrocko.model.event.Eventobjects orNonemarkers – list of

pyrocko.gui.snuffler.marker.Markerobjects orNonentracks – float, number of tracks to be shown initially (default: 12)

follow – time interval (in seconds) for real time follow mode or

Nonecontrols – bool, whether to show the main controls (default:

True)opengl – bool, whether to use opengl (default:

False)

- snuffle(traces, **kwargs)[source]¶

Show traces in a snuffler window.

- Parameters:

stations – list of

pyrocko.model.station.Stationobjects orNoneevents – list of

pyrocko.model.event.Eventobjects orNonemarkers – list of

pyrocko.gui.snuffler.marker.Markerobjects orNonentracks – int, number of tracks to be shown initially (default: 12)

follow – time interval (in seconds) for real time follow mode or

Nonecontrols – bool, whether to show the main controls (default:

True)opengl – bool, whether to use opengl (default:

False)

- downsample_tpad(deltat_in, deltat_out, allow_upsample_max=1, ftype='fir-remez')[source]¶

Get approximate amount of cutoff which will be produced by downsampling.

The

Trace.downsample_to()method removes some samples at the beginning and end of the trace which is downsampled. This function estimates the approximate length [s] which will be cut off for a given set of parameters supplied toTrace.downsample_to().- Parameters:

- Returns:

Approximate length [s] which will be cut off.

See

Trace.downsample_to()for details.

- get_traces_data_as_array(traces)[source]¶

Merge data samples from multiple traces into a 2D array.

- Parameters:

traces (list of

pyrocko.Traceobjects) – Input waveforms.- Raises:

IncompatibleTracesif traces have different time span, sample rate or data type, or if traces is an empty list.- Returns:

2D array as

data[itrace, isample].- Return type:

- make_traces_compatible(traces, dtype=None, deltat=None, enforce_global_snap=True, warn_snap=False)[source]¶

Homogenize sampling rate, time span, sampling instants, and data type.

This function takes a group of traces and tries to make them compatible in terms of data type and sampling rate, time span, and sampling instants of time.

If necessary, traces are (in order):

casted to the same data type.

downsampled to a common sampling rate, using decimation cascades.

resampled to common sampling instants in time, using Sinc interpolation.

cut to the same time span. The longest time span covered by all traces is used.

- Parameters:

dtype (

numpy.dtype) – Force traces to be casted to the given data type. If not specified, the traces are cast tofloat.deltat (float) – Sampling interval [s]. If not specified, the longest sampling interval among the input traces is chosen.

enforce_global_snap (bool) – If

True, choose sampling instants to be even multiples of the sampling rate in system time. When set toFalsetraces are still resampled to common time instants (if necessary), but they may be offset to the system time sampling rate multiples.warn_snap (bool) – If set to

Truewarn, when resampling has to be performed.

- exception IncompatibleTraces[source]¶

Bases:

ExceptionRaised when traces have incompatible sampling rate, time span or data type.

- exception InfiniteResponse[source]¶

Bases:

ExceptionThis exception is raised by

Traceoperations when deconvolution of a frequency response (instrument response transfer function) would result in a division by zero.

- exception MisalignedTraces[source]¶

Bases:

ExceptionThis exception is raised by some

Traceoperations when tmin, tmax or number of samples do not match.

- exception OverlappingTraces[source]¶

Bases:

ExceptionThis exception is raised by some

Traceoperations when traces overlap but should not.

- exception NoData[source]¶

Bases:

ExceptionThis exception is raised by some

Traceoperations when no or not enough data is available.

- exception AboveNyquist[source]¶

Bases:

ExceptionThis exception is raised by some

Traceoperations when given frequencies are above the Nyquist frequency.

- exception TraceTooShort[source]¶

Bases:

ExceptionThis exception is raised by some

Traceoperations when the trace is too short.

- minmax(traces, key=None, mode='minmax', outer_mode='minmax')[source]¶

Get data range given traces grouped by selected pattern.

- Parameters:

key – a callable which takes as single argument a trace and returns a key for the grouping of the results. If this is

None, the default,lambda tr: (tr.network, tr.station, tr.location, tr.channel)is used.mode –

'minmax'or floating point number. If this is'minmax', minimum and maximum of the traces are used, if it is a number, mean +- standard deviation timesmodeis used.

- param outer_mode:

'minmax'to use mininum and maximum of the single-trace ranges, or

'robust'to use the interval to discard 10% extreme values on either end.

- Returns:

a dict with the combined data ranges.

Examples:

ranges = minmax(traces, lambda tr: tr.channel) print ranges['N'] # print min & max of all traces with channel == 'N' print ranges['E'] # print min & max of all traces with channel == 'E' ranges = minmax(traces, lambda tr: (tr.network, tr.station)) print ranges['GR', 'HAM3'] # print min & max of all traces with # network == 'GR' and station == 'HAM3' ranges = minmax(traces, lambda tr: None) print ranges[None] # prints min & max of all traces

- minmaxtime(traces, key=None)[source]¶

Get time range given traces grouped by selected pattern.

- Parameters:

key – a callable which takes as single argument a trace and returns a key for the grouping of the results. If this is

None, the default,lambda tr: (tr.network, tr.station, tr.location, tr.channel)is used.- Returns:

a dict with the combined data ranges.

- degapper(traces, maxgap=5, fillmethod='interpolate', deoverlap='use_second', maxlap=None)[source]¶

Try to connect traces and remove gaps.

This method will combine adjacent traces, which match in their network, station, location and channel attributes. Overlapping parts are handled according to the

deoverlapargument.- Parameters:

traces – input traces, must be sorted by their full_id attribute.

maxgap – maximum number of samples to interpolate.

fillmethod – what to put into the gaps: ‘interpolate’ or ‘zeros’.

deoverlap – how to handle overlaps: ‘use_second’ to use data from second trace (default), ‘use_first’ to use data from first trace, ‘crossfade_cos’ to crossfade with cosine taper, ‘add’ to add amplitude values.

maxlap – maximum number of samples of overlap which are removed

- Returns:

list of traces

- rotate(traces, azimuth, in_channels, out_channels)[source]¶

2D rotation of traces.

- Parameters:

traces – list of input traces

azimuth – difference of the azimuths of the component directions (azimuth of out_channels[0]) - (azimuth of in_channels[0])

in_channels – names of the input channels (e.g. ‘N’, ‘E’)

out_channels – names of the output channels (e.g. ‘R’, ‘T’)

- Returns:

list of rotated traces

- rotate_to_rt(n, e, source, receiver, out_channels=('R', 'T'))[source]¶

Rotate traces from NE to RT system.

- Parameters:

n (

Trace) – North trace.e (

Trace) – East trace.source (

pyrocko.gf.seismosizer.Source) – Source of the recorded signal.receiver (

pyrocko.model.location.Location) – Receiver of the recorded signal.out_channels – Channel codes of the output channels (radial, transversal). Default is (‘R’, ‘T’).

- :type out_channels

optional, tuple[str, str]

- rotate_to_lqt(traces, backazimuth, incidence, in_channels, out_channels=('L', 'Q', 'T'))[source]¶

Rotate traces from ZNE to LQT system.

- Parameters:

traces – list of traces in arbitrary order

backazimuth – backazimuth in degrees clockwise from north

incidence – incidence angle in degrees from vertical

in_channels – input channel names

out_channels – output channel names (default: (‘L’, ‘Q’, ‘T’))

- Returns:

list of transformed traces

- project(traces, matrix, in_channels, out_channels)[source]¶

Affine transform of three-component traces.

Compute matrix-vector product of three-component traces, to e.g. rotate traces into a different basis. The traces are distinguished and ordered by their channel attribute. The tranform is applied to overlapping parts of any appropriate combinations of the input traces. This should allow this function to be robust with data gaps. It also tries to apply the tranformation to subsets of the channels, if this is possible, so that, if for example a vertical compontent is missing, horizontal components can still be rotated.

- Parameters:

traces – list of traces in arbitrary order

matrix – tranformation matrix

in_channels – input channel names

out_channels – output channel names

- Returns:

list of transformed traces

- project_dependencies(matrix, in_channels, out_channels)[source]¶

Figure out what dependencies project() would produce.

- correlate(a, b, mode='valid', normalization=None, use_fft=False)[source]¶

Cross correlation of two traces.

- Parameters:

a,b – input traces

mode –

'valid','full', or'same'normalization –

'normal','gliding', orNoneuse_fft – bool, whether to do cross correlation in spectral domain

- Returns:

trace containing cross correlation coefficients

This function computes the cross correlation between two traces. It evaluates the discrete equivalent of

where the star denotes complex conjugate. Note, that the arguments here are swapped when compared with the

numpy.correlate()function, which is internally called. This function should be safe even with older versions of NumPy, where the correlate function has some problems.A trace containing the cross correlation coefficients is returned. The time information of the output trace is set so that the returned cross correlation can be viewed directly as a function of time lag.

Example:

# align two traces a and b containing a time shifted similar signal: c = pyrocko.trace.correlate(a,b) t, coef = c.max() # get time and value of maximum b.shift(-t) # align b with a

- same_sampling_rate(a, b, eps=1e-06)[source]¶

Check if two traces have the same sampling rate.

- Parameters:

a,b – input traces

eps – relative tolerance

- fix_deltat_rounding_errors(deltat)[source]¶

Try to undo sampling rate rounding errors.

Fix rounding errors of sampling intervals when these are read from single precision floating point values.

Assumes that the true sampling rate or sampling interval was an integer value. No correction will be applied if this would change the sampling rate by more than 0.001%.

- merge_codes(a, b, sep='-')[source]¶

Merge network-station-location-channel codes of a pair of traces.

- class CosTaper(a, b, c, d)[source]¶

Bases:

TaperCosine Taper.

- Parameters:

a – start of fading in

b – end of fading in

c – start of fading out

d – end of fading out

- ♦ a¶

float

- ♦ b¶

float

- ♦ c¶

float

- ♦ d¶

float

- class CosFader(xfade=None, xfrac=None)[source]¶

Bases:

TaperCosine Fader.

- Parameters:

xfade – fade in and fade out time in seconds (optional)

xfrac – fade in and fade out as fraction between 0. and 1. (optional)

Only one argument can be set. The other should to be

None.- ♦ xfade¶

float, optional

- ♦ xfrac¶

float, optional

- numpy_correlate_fixed(a, b, mode='valid', use_fft=False)[source]¶

Call

numpy.correlate()with fixes.c[k] = sum_i a[i+k] * conj(b[i])

Note that the result produced by newer numpy.correlate is always flipped with respect to the formula given in its documentation (if ascending k assumed for the output).

- numpy_correlate_emulate(a, b, mode='valid')[source]¶

Slow version of

numpy.correlate()for comparison.

- numpy_correlate_lag_range(a, b, mode='valid', use_fft=False)[source]¶

Get range of lags for which

numpy.correlate()produces values.

- autocorr(x, nshifts)[source]¶

Compute biased estimate of the first autocorrelation coefficients.

- Parameters:

x – input array

nshifts – number of coefficients to calculate

- yulewalker(x, order)[source]¶

Compute autoregression coefficients using Yule-Walker method.

- Parameters:

x – input array

order – number of coefficients to produce

A biased estimate of the autocorrelation is used. The Yule-Walker equations are solved by

numpy.linalg.inv()instead of Levinson-Durbin recursion which is normally used.

- hilbert(x, N=None)[source]¶

Return the hilbert transform of x of length N.

(from scipy.signal, but changed to use fft and ifft from numpy.fft)

- co_lfilter(*args, **kwargs)[source]¶

Successively filter broken continuous trace data (coroutine).

Create coroutine which takes

Traceobjects, filters their data throughscipy.signal.lfilter()and sends newTraceobjects containing the filtered data to target. This is useful, if one wants to filter a long continuous time series, which is split into many successive traces without producing filter artifacts at trace boundaries.Filter states are kept per channel, specifically, for each (network, station, location, channel) combination occuring in the input traces, a separate state is created and maintained. This makes it possible to filter multichannel or multistation data with only one

co_lfilter()instance.Filter state is reset, when gaps occur.

Use it like this:

from pyrocko.trace import co_lfilter, co_list_append filtered_traces = [] pipe = co_lfilter(co_list_append(filtered_traces), a, b) for trace in traces: pipe.send(trace) pipe.close()

- co_downsample(target, q, n=None, ftype='fir')[source]¶

Successively downsample broken continuous trace data (coroutine).

Create coroutine which takes

Traceobjects, downsamples their data and sends newTraceobjects containing the downsampled data to target. This is useful, if one wants to downsample a long continuous time series, which is split into many successive traces without producing filter artifacts and gaps at trace boundaries.Filter states are kept per channel, specifically, for each (network, station, location, channel) combination occuring in the input traces, a separate state is created and maintained. This makes it possible to filter multichannel or multistation data with only one

co_lfilter()instance.Filter state is reset, when gaps occur. The sampling instances are choosen so that they occur at (or as close as possible) to even multiples of the sampling interval of the downsampled trace (based on system time).

- class DomainChoice(...) str[source]¶

Bases:

StringChoiceAny

strout of['time_domain', 'frequency_domain', 'envelope', 'absolute', 'cc_max_norm'].

- class MisfitSetup(**kwargs)[source]¶

Bases:

ObjectContains misfit setup to be used in

Trace.misfit()- Parameters:

description – Description of the setup

norm – L-norm classifier

taper – Object of

Taperfilter – Object of

FrequencyResponsedomain – [‘time_domain’, ‘frequency_domain’, ‘envelope’, ‘absolute’, ‘cc_max_norm’]

Can be dumped to a yaml file.

- ♦ description¶

str, optional

- ♦ norm¶

int

- ♦ filter¶

pyrocko.response.FrequencyResponse, optional

- ♦ domain¶

str(DomainChoice), default:'time_domain'

- equalize_sampling_rates(trace_1, trace_2)[source]¶

Equalize sampling rates of two traces (reduce higher sampling rate to lower).

Returns a copy of the resampled trace if resampling is needed.

- Lx_norm(u, v, norm=2)[source]¶

Calculate the misfit denominator m and the normalization divisor n according to norm.

The normalization divisor n is calculated from

v.- Parameters:

u –

numpy.ndarrayv –

numpy.ndarraynorm – (default = 2)

uandvmust be of same size.